Reviews

This blog

This blog is something of a vanity project. It has a readership of approximately zero, excluding myself. Sometimes I wonder what the point of a blog with no readers is. I have no plans to abandon it, however.

You might think that the stereotypical Yorkshireman’s attitude of “I say what I like, and I like what I bloody well say” is exaggerated for comic effect, but it’s true: I read the blog and I really do like what I bloody well say.

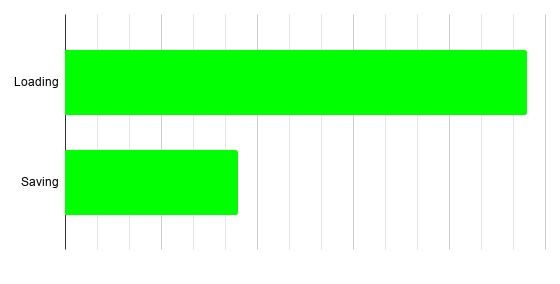

And by read it, I mean proof-read it. A lot. And by a lot, I mean really a lot. I do this because I am nowhere near as good at writing as I think I should be. Sure, I like what I bloody well say, but I often don’t like how I’ve bloody well said it. Writing down even simple ideas, like why Foden doesn’t do well for England, takes me a great deal of work to get right, from polishing the phrasing to shepherding my thoughts into a coherent structure. My father is a published author and cartoonist; I clearly did not inherit the drawing genes, but surely I got the writing ones, right?

Before starting the blog, I had read quite a few Tim Harford books, smugly thinking to myself that Tim has such a formulaic writing style. Thanks to this blog, I am now aware that I’m not fit to click save on his Word documents.

In theory, writing this blog is good practice, and I’ll show the kind of improvement my entire readership is praying for. And there’s more: writing about how to lose at Dominion has helped me improve as a player. For example, I no longer underestimate alt-VP strategies as much as I used to.

There are benefits to writing here, but it is also a vanity project that, ironically, holds a mirror up to some of my shortcomings. In the spirit of improving the benefits-to-revealed-flaws ratio, I could use it to do what I should have done in the past: annotate, comment on and highlight the valuable parts of books I have read, as a way of remembering what was in them.

Football Hackers

I had this thought roughly a year ago while reading “Football Hackers” by Christoph Biermann. Unfortunately, there is little in the book to write home about. It is, however, very well written. I read it to the end quite happily, despite realising early on that it was not going to share any notable insights on its titular subject. I can more or less spoil the book by telling you that the owners of Brentford and Brighton made it big by betting. As for interesting detail on that front: they use Singaporean bookmakers who are not spooked by large bets, and they bet on over/under markets, which reduce the three possible outcomes of a football match (home win, draw, away win) to two, thereby simplifying the calculations.
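To see why two outcomes are simpler to price than three, here is a rough sketch of my own, not anything from the book and not a claim about what the Brentford or Brighton owners actually do. It assumes each team’s goals follow an independent Poisson distribution, a standard simplification that the Dixon/Coles model refines. The function names and scoring rates are invented for illustration.

```python
import math


def poisson_pmf(k: int, rate: float) -> float:
    """Probability of exactly k goals when goals arrive at the given average rate."""
    return math.exp(-rate) * rate**k / math.factorial(k)


def over_under(home_rate: float, away_rate: float, line: float = 2.5):
    """Two-way market: (P(total goals > line), P(total goals < line)).

    Assumes independent Poisson goals, so the total is itself Poisson with
    rate home_rate + away_rate: one short sum, no scoreline grid needed.
    `line` is assumed to be a half-goal line (e.g. 2.5), so there is no push.
    """
    total_rate = home_rate + away_rate
    under = sum(poisson_pmf(k, total_rate) for k in range(math.floor(line) + 1))
    return 1.0 - under, under


def home_draw_away(home_rate: float, away_rate: float, max_goals: int = 15):
    """Three-way market: needs the joint probability of every scoreline."""
    home = draw = away = 0.0
    for h in range(max_goals + 1):
        for a in range(max_goals + 1):
            p = poisson_pmf(h, home_rate) * poisson_pmf(a, away_rate)
            if h > a:
                home += p
            elif h == a:
                draw += p
            else:
                away += p
    return home, draw, away


# Invented scoring rates, purely for illustration.
over, under = over_under(1.5, 1.2)
home, draw, away = home_draw_away(1.5, 1.2)
print(f"over 2.5: {over:.3f}, under 2.5: {under:.3f}")
print(f"home: {home:.3f}, draw: {draw:.3f}, away: {away:.3f}")
```

The over/under price needs one short sum over a single number (total goals); the home/draw/away price needs a grid over every possible scoreline.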

Insights on the football front are even more elusive. Reading between the lines, it sounds as if Brentford’s success at corners comes from making sure they have players where the ball tends to go after the first contact. I always found the simplistic “more players in the box” explanation of Brentford’s set-piece success very unsatisfying. If that were the reason, it should be easy for other teams to match Brentford’s set-piece success, and Brentford should also suffer a lot of devastating counter-attacks. I see neither. Likewise, what the book implies explains my son’s exasperation at how consistently Brentford stab home messy rebounds and second balls from corners.

How To Win The Premier League

Asides aside, this brings me to what I wanted to write about: “How to Win the Premier League” by Ian Graham. It is the opposite of “Football Hackers” in that the author was employed by elite football clubs rather than being a journalist on the outside trying to peek in. The result is a book that is less well written but does share some real insight into the data and processes used in the football industry.

The content speaks to me on a couple of levels: I’m interested in how clubs use data to analyse games, and I can remember the players he recommended at Spurs and Liverpool. While he had mixed success and infrequent influence at Spurs, he was, by extension, involved in Liverpool’s infamous transfer committee, which was notorious mainly for its record of mediocre signings.

Explanations and excuses for the early failures at Liverpool feature in some detail, but the book has the same annoying reluctance to tell you what really went on as every other football book I’ve ever read. I’m going to give the author a pass on this front, since he was only associated with Spurs and was a hybrid worker (translation: he worked from home a lot) during his time at Liverpool. Don’t read the book if you want to know what Klopp is really like; for that matter, don’t read any football-related book if you want to know what goes on or what anyone is really like.

The book explains xG and describes current state-of-the-art xG models, including the many extra considerations they take into account beyond shot location. I think xG numbers are basically lies, but at least the lies are better now.

One thing that is apparent from reading the book is that his system for rating players is very, very good. The list of successes is extremely impressive: Bale, Salah, Firmino, Mané, Alisson, Van Dijk, Coutinho, Robertson, Matip, Fabinho and others were all recommended by the author to Spurs or Liverpool. There was some good fortune in that Klopp already rated highly many of the players Graham suggested. In the case of Robertson, recommended as an attacking full back who couldn’t defend, Klopp cared far less about Robertson’s defending than about his attacking, and was prepared to make sure Robertson had cover when defending. There was also considerable fortune in having a manager of Klopp’s talents to get the best out of the signings. All the same, Liverpool have been incredibly successful in the transfer market.

The system Graham uses to evaluate players is basically Karun Singh’s expected threat (“possession value”) model, with values from other leagues calibrated to Premier League level using estimates of how strong those leagues are, as judged by the Dixon/Coles model. I never needed to worry about the latter, but I did try to use the expected threat (henceforth “xT”) model to evaluate my junior football teams.
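For anyone curious how xT works, here is a minimal sketch of the idea as Singh describes it: split the pitch into zones, then value each zone as the chance of shooting and scoring from there, plus the chance of moving the ball on, weighted by the value of where it tends to end up, and repeat until the values settle. This is not Graham’s model (no league calibration, no Dixon/Coles), the three-zone “pitch” and all its probabilities are invented, and the function names are my own. Charging a failed pass or dribble the full threat of its starting zone is also an assumption; implementations differ on how, or whether, failures are penalised.

```python
def expected_threat(shoot, goal, move, transitions, tol=1e-12, max_iter=10_000):
    """Value each zone z by iterating
        xT(z) = shoot[z] * goal[z] + move[z] * sum_z2 transitions[z][z2] * xT(z2)
    until the values stop changing.

    shoot[z]: chance the next action from zone z is a shot
    goal[z]: chance a shot from zone z is scored
    move[z]: chance the next action from zone z is a pass or carry
    transitions[z][z2]: chance a move from z successfully ends in z2
        (rows may sum to less than 1; the shortfall is lost possession)
    """
    n = len(shoot)
    xt = [0.0] * n
    for _ in range(max_iter):
        new = [
            shoot[z] * goal[z]
            + move[z] * sum(transitions[z][z2] * xt[z2] for z2 in range(n))
            for z in range(n)
        ]
        converged = max(abs(a - b) for a, b in zip(new, xt)) < tol
        xt = new
        if converged:
            break
    return xt


def rate_move(xt, start, end, succeeded):
    """Credit for one pass or carry from zone `start` to zone `end`."""
    if succeeded:
        return xt[end] - xt[start]
    # Assumption: a failed move forfeits the threat the team held at `start`.
    return -xt[start]


# Toy three-zone pitch: own third, middle third, final third. Invented numbers.
shoot = [0.0, 0.05, 0.30]
goal = [0.0, 0.03, 0.12]
move = [1.0, 0.95, 0.70]
transitions = [
    [0.3, 0.6, 0.0],
    [0.1, 0.4, 0.4],
    [0.0, 0.2, 0.5],
]

xt = expected_threat(shoot, goal, move, transitions)
print([round(v, 3) for v in xt])  # [0.042, 0.049, 0.066]
print(rate_move(xt, 1, 2, succeeded=True))   # forward pass that arrives
print(rate_move(xt, 1, 2, succeeded=False))  # forward pass that goes astray
```

In a real analysis the zones form a much finer grid (Singh’s original post used 16 by 12) and the probabilities are counted from event data. Even in this toy version, a successful forward pass earns less than a misplaced one costs, so a few mistakes soon wipe out the good moves.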

Considering the aphorism that all models are wrong but some are useful, I have to say I didn’t find xT very useful. The ability level of the kids in my junior football team was such that they got microscopic scores. Likewise, my boys’ performances were inconsistent, and the numbers I got out of the xT analysis might as well have been random. We would win comfortably, then I would look at the xT scores and they would tell me that, in aggregate, my players had made almost no positive contribution whatsoever. It’s very depressing to be told that all the good stuff that resulted in goals and shots was offset by misplaced passes and failed dribbles.

The book attributes Liverpool’s transfer success to their xT model. I have therefore massively raised my opinion of xT models; clearly they have considerable value.

I liked the book a great deal. Unlike many football books, it does actually reveal some of the internal machinations of football clubs. Graham combines an ability to deal with numbers and algorithms with an ability to think critically about the world, a rare combination indeed. He is able to marshal data and stats and draw logical, rational conclusions from them. I could not say the same about Chris Anderson and David Sally, the authors of “The Numbers Game”, a book whose conclusions Graham’s findings contradict.

Graham devotes a short chapter to explaining why, actually, clean sheets are not more valuable than goals, why Darren Bent wasn’t the best forward in the Premier League at the time (2013), and why corners aren’t worthless. It might be a slightly snippy chapter to include, but for me it underscores his clarity of thought and his desire to have a theoretical basis for the stats and the data.